Paving the Cowpaths: Why AI Coding Tools Feel Limited and What Comes Next

Walk through any old European city and you’ll notice something odd: the roads twist and turn at strange angles, doubling back on themselves, rarely taking the most direct path from A to B. Why? As the story goes, road builders centuries ago simply paved over the trails that cows already walked. These “cowpaths” weren’t designed for speed, safety, or scale, but once paved, they became fixed infrastructure. Useful, but suboptimal.

The same thing is happening across the tools we use every day.

Word, PowerPoint, and IDEs were all built for an era when creation happened one unit at a time — word by word, slide by slide, line by line. These tools assume the human is the primary builder, carefully assembling content piece by piece.

But the unit of creation is changing.

We’re moving from writing documents to creating ideas, from building slides to designing presentations, from coding lines to building applications. The work now begins with intent — an ask or a goal — and unfolds through collaboration between human and AI. “Write the draft.” “Build the deck.” “Generate the app.” Together, we iterate, refine, and validate toward a finished outcome.

AI has made the old frameworks faster, but not smarter. They still constrain us to workflows built for manual construction, not collaborative iteration. Most AI tools are paving over those frameworks — adding automation to processes that were never designed for it — rather than rethinking what creation itself can be.

The Same Pattern in Software Development

The same dynamic plays out in software engineering.

Today’s AI coding tools are paving the cowpaths. They sit on top of yesterday’s workflows — typing code line by line, handing off pull requests, running tests — and add a thin AI layer to make each step faster. But just like winding medieval streets, these workflows weren’t designed for the world we’re entering. They weren’t designed for AI.

And that’s why, despite the hype, an estimated 95% of GenAI pilots never reach production, and Gartner predicts that more than 40% of agentic AI projects will be canceled by the end of 2027. The cowpaths are paved, but we still can’t get where we need to go. Developers and enterprises hit the same bottlenecks (context loss, shadow dependencies, failure to scale) because we’re optimizing the old cowpaths, not building intelligent highways.

The Developer’s Reality Has Changed

In 2025, over 90% of developers spend at least two hours a day working with AI agents, not typing out code themselves. Daily routines have shifted toward:

- Briefing AI agents with high-level goals and prompts

- Reviewing, supervising, and refining AI-generated output

- Validating code for scalability, safety, and compliance

But the tools remain relics of an earlier era. More than 60% of developers report that AI tools miss critical context during key development tasks like refactoring and test generation. And in one controlled study, experienced developers working in mature, complex codebases took 19% longer to finish tasks when they used AI tools.

Fragmentation tax: In 2025, 59% of developers juggle three or more AI coding assistants in their workflow. That introduces new layers of complexity, context loss, and governance headaches, and it fuels a “cowpath” trap of serial, rather than coordinated, automation. Only 28% of dev teams report confidence in their AI-generated code, and they tend to be the teams that operate with fewer, more integrated tools.

Beyond Speed: Imperfection in the Agentic Era

The next wave of AI platforms can’t just be faster; they need to be resilient. History shows us that the most transformative systems weren’t designed for perfection, but for imperfection.

The internet wasn’t built to avoid outages. It was designed to expect them — rerouting packets dynamically, keeping the system alive even when parts failed. That resilience is what made it the backbone of modern life.

AI is no different. Outputs will sometimes be inconsistent, incomplete, or simply wrong. But in an agentic world, those imperfections multiply. A single misstep in a prompt, plan, or dependency doesn’t just cause a bug; it cascades across agents, compounding errors, burning cycles, and creating systemic fragility.
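To put rough numbers on that compounding, here is a back-of-the-envelope sketch. It assumes every step in an agent chain succeeds independently and with the same probability. Both the 95% figure and the independence assumption are illustrative, not measurements, and `chain_success_rate` is a name made up for this example.

```python
def chain_success_rate(per_step_success: float, steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumes steps are independent and equally reliable (an illustrative
    simplification; real agent failures are often correlated).
    """
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_success ** steps


if __name__ == "__main__":
    # Hypothetical 95% per-step reliability across chains of growing length.
    for steps in (1, 5, 10, 20):
        rate = chain_success_rate(0.95, steps)
        print(f"{steps:>2} steps: {rate:.0%} chance the whole chain is right")
```

Under these assumptions, a ten-step chain of 95%-reliable steps gets everything right only about 60% of the time, and a twenty-step chain about 36%. Per-step quality that looks excellent in isolation can still produce a fragile system once steps are chained.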

The next evolution of software engineering won’t come from paving faster cowpaths, but from building intelligent infrastructure that expects imperfection — learning, validating, and improving as it moves.

From Cowpaths to Agentic Highways

Every major leap in technology began when we stopped paving old roads and started building new ones. The GUI wasn’t a prettier command line; it redefined how humans interact with machines. The cloud didn’t just move data centers online; it reimagined how software scales and adapts.

Agentic engineering marks the same kind of inflection point. It replaces task-level automation with a new, continuously improving system of collaboration between humans and AI.

For enterprises, that shift demands new foundations:

- Capturing intent clearly: Moving beyond ad-hoc prompts to structured, adaptive, auditable plans.

- Transparent orchestration: Governing a coordinated team of AI agents, rather than adding another assistant to the mix.

- Continuous validation and optimization: Benchmarking and improving every cycle, because AI outputs will never be perfect the first time.

This is what it means to build agentic highways: systems designed for adaptation, not just acceleration. The stakes are real. Most tech leaders expect agentic AI to reach full-scale adoption within three years, yet most current tools still pave the same old paths.

When AI agents lack a clear, validated plan — what to build, how to verify it, and what success looks like — they generate code without direction. The way forward isn’t faster cowpaths. It’s building systems that evolve purposefully — planning, validating, and improving with every iteration.

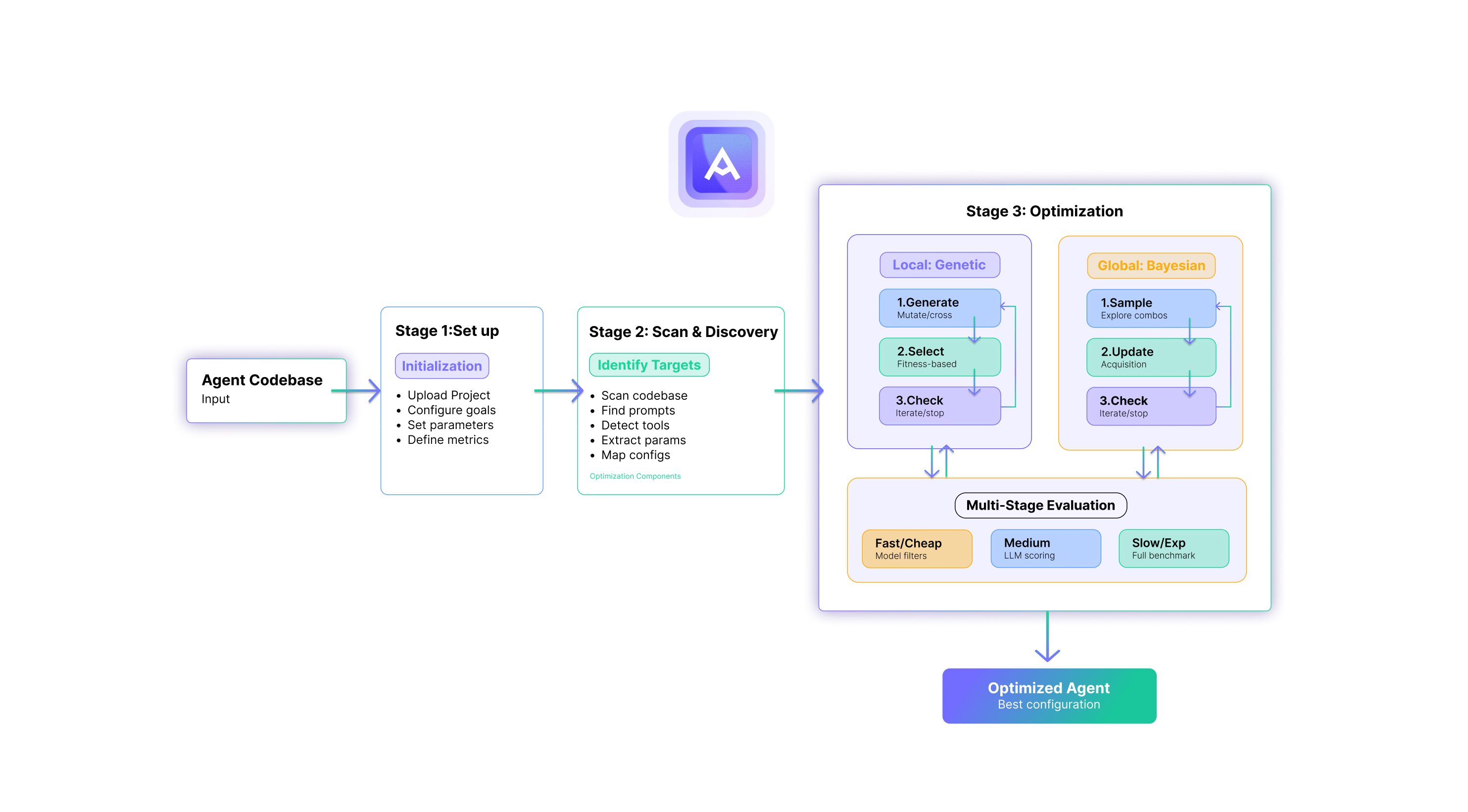

Artemis: Building a New Highway

Artemis wasn’t designed to pave old cowpaths; it’s engineered to build real highways for the AI era. It:

- Captures messy, high-level ideas and turns them into clear, adaptive plans — a persistent intent blueprint for developers and AI agents.

- Orchestrates a coordinated team of agents, centrally governed under robust guardrails, so enterprises gain transparency and control.

- Validates, optimizes, and learns in every cycle — surfacing the best solutions and ensuring results continually improve.

Artemis is built not just for the best-case scenario, but for the messy reality of AI. It assumes imperfection, expects fragility, and transforms it into resilience. In an agentic world where small cracks can become sinkholes, Artemis provides the highways that keep enterprises moving safely, reliably, and at scale.

Artemis applies AI not just to create more code, but to improve and govern it — evolving the code you have into the code you need.

The Road Ahead

The future of AI engineering, and of creation itself, won’t be built by paving the old roads. It will be built by designing new ones: agentic, adaptive, and aligned from the start.

In the second part of this series, we’ll look at how intelligent planning turns intent into the first true foundation for that road. Stay tuned.