The Art and Science of a Good Plan: Turning Requirements into Reliable Results

AI coding agents have made it easy to move from idea to prototype. But taking that prototype to production is a different challenge entirely. The difficulty isn’t generating code; it’s understanding what needs to be built, in what order, and how to verify that it actually works.

This problem is now well known, and many working in AI-assisted software engineering are turning to more upfront planning and specification as the solution. Without clear guidance, even capable agents make a mess: they delete tests, ignore edge cases, or build features that technically work but don't solve the actual problem. Prototyping with AI is a great experience, but getting from that first step to a system in production usually takes a much bigger investment of time, learning, and refinement. It requires a good plan.

But what makes a plan "good" for AI agents? Our team has been actively exploring this question for a while. After reflecting on our own experience and studying the work of others, we've identified four essential principles. These aren't abstract ideals; they're practical requirements that determine whether your AI-generated code ships or gets thrown away.

Principle 1: Clear Objectives

The Problem: Specification Gaming

"Build a dashboard" has no finish line. Your agent will build something, declare success, and move on. The result might look impressive in a demo but fail in production because you never defined what "working" actually means.

This isn't a new problem. Prior work on specification gaming shows how agents optimize for literal specifications without achieving intended outcomes. In one example, an agent playing a boat racing game learned to collect power-up items in circles rather than complete the race - it maximized points without winning. The agent satisfied the specification perfectly while completely missing the goal.

If you use coding assistants, you may have run into a similar problem in a different context. Does your AI assistant really want to solve your problem, or is it just seeking your approval? If it's the latter, it is more likely to move on early with a well-crafted, emoji-laden message declaring victory while the code is still broken.

Even when specifications are correct in simple contexts, goals often fail to generalize. An agent may learn good patterns from well-studied examples, and perform well on a benchmark such as SWE-Bench, yet fail to perform as well when facing more diverse tasks.

The Solution: Testable Success Criteria

Clear objectives define what success looks like in ways you can actually verify:

Vague: "Build a dashboard"

Clear: "Build a dashboard that displays project status, updates every 30 seconds via WebSocket, loads in under 2 seconds, and handles connection failures gracefully"

Vague: "Add authentication"

Clear: "Implement JWT-based authentication with 15-minute token expiry, refresh token rotation, and middleware that returns 401 for invalid tokens"

The test is simple: can you write an automated test for it? Or at least, can you show it to a user and get a yes/no answer? If your objective is fuzzy, your implementation will be too.

This directly addresses specification gaming: when objectives include concrete validation criteria, agents can't optimize for narrow interpretations. The objective is the test. Our approach uses quantitative confidence scoring to determine when objectives are clear enough to proceed. Rather than relying on intuition about whether requirements are "good enough," we score definition clarity numerically. This transforms the subjective question "is this clear?" into a measurable threshold that gates progression to implementation.
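The post does not publish its scoring formula, so the following is only a minimal sketch of the idea under our own assumptions: each success criterion is rated by how it can be verified, the ratings are averaged into a clarity score, and planning proceeds only once that score clears a threshold. The `Criterion` shape, the three verification kinds, their weights, and the 0.8 threshold are all hypothetical.

```typescript
// Hypothetical sketch: score how clearly an objective is defined.
// Verification kinds, weights, and the threshold are illustrative assumptions.

type Verification = "automated" | "manual-yes-no" | "none";

interface Criterion {
  description: string;
  verification: Verification;
}

interface Objective {
  summary: string;
  criteria: Criterion[];
}

// Assumed weights: an automated test counts fully, a yes/no user check partly.
const WEIGHTS: Record<Verification, number> = {
  automated: 1.0,
  "manual-yes-no": 0.6,
  none: 0.0,
};

// Average verifiability across criteria; an objective with no criteria scores 0.
function clarityScore(objective: Objective): number {
  if (objective.criteria.length === 0) return 0;
  const total = objective.criteria.reduce(
    (sum, c) => sum + WEIGHTS[c.verification],
    0,
  );
  return total / objective.criteria.length;
}

// Gate progression to implementation on a numeric threshold (assumed 0.8).
function readyToImplement(objective: Objective, threshold = 0.8): boolean {
  return clarityScore(objective) >= threshold;
}

const vague: Objective = { summary: "Build a dashboard", criteria: [] };

const clear: Objective = {
  summary: "Project status dashboard",
  criteria: [
    { description: "Displays project status", verification: "automated" },
    { description: "Updates every 30 seconds via WebSocket", verification: "automated" },
    { description: "Loads in under 2 seconds", verification: "automated" },
    { description: "Handles connection failures gracefully", verification: "manual-yes-no" },
  ],
};

console.log(clarityScore(vague), readyToImplement(vague));
console.log(clarityScore(clear), readyToImplement(clear));
```

Averaging rewards objectives in which every criterion can be checked; a real scorer would likely also weigh ambiguity in the wording itself, which this sketch ignores.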

Principle 2: Actionable Tasks

The Problem: Context Loss in Decomposition

Task decomposition is well-studied in planning research. Work on Tree of Thoughts showed that breaking problems into coherent intermediate steps improves LLM performance on complex tasks. Structure matters for problem-solving.

For planning systems, this translates to hierarchical task breakdown. We structure plans as trees with four levels: INITIATIVE → EPIC → TASK → SUBTASK, where each child must be a smaller task than its parent. This enforces that decomposition actually reduces scope at each level.
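The hierarchy rule can be checked mechanically. The sketch below is our own illustration, not the authors' schema: it assumes each node carries a `level` and a rough `estimateHours` size estimate, and it verifies that every child sits exactly one level below its parent and is strictly smaller. `PlanNode`, `estimateHours`, and `validateTree` are hypothetical names.

```typescript
// Hypothetical plan-tree validator for INITIATIVE → EPIC → TASK → SUBTASK.
const LEVELS = ["INITIATIVE", "EPIC", "TASK", "SUBTASK"] as const;
type Level = (typeof LEVELS)[number];

interface PlanNode {
  title: string;
  level: Level;
  estimateHours: number; // assumed size measure used to compare parent and child
  children: PlanNode[];
}

// Returns a list of violations; an empty list means the tree is well-formed.
function validateTree(node: PlanNode, path: string[] = []): string[] {
  const here = [...path, node.title].join(" > ");
  const errors: string[] = [];
  const depth = LEVELS.indexOf(node.level);

  if (node.level === "SUBTASK" && node.children.length > 0) {
    errors.push(`${here}: SUBTASK nodes cannot have children`);
  }

  for (const child of node.children) {
    // Each child must be exactly one level below its parent.
    if (LEVELS.indexOf(child.level) !== depth + 1) {
      errors.push(`${here}: ${child.level} "${child.title}" cannot sit under ${node.level}`);
    }
    // Decomposition must actually reduce scope.
    if (child.estimateHours >= node.estimateHours) {
      errors.push(`${here}: "${child.title}" is not smaller than its parent`);
    }
    errors.push(...validateTree(child, [...path, node.title]));
  }
  return errors;
}

const plan: PlanNode = {
  title: "Account security",
  level: "INITIATIVE",
  estimateHours: 200,
  children: [
    {
      title: "Password management",
      level: "EPIC",
      estimateHours: 40,
      children: [
        {
          title: "Add password reset flow",
          level: "TASK",
          estimateHours: 12,
          children: [
            { title: "Create password_reset_tokens migration", level: "SUBTASK", estimateHours: 1, children: [] },
            { title: "Implement token generation", level: "SUBTASK", estimateHours: 2, children: [] },
          ],
        },
      ],
    },
  ],
};

console.log(validateTree(plan));
```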

But structure alone isn't enough. A task like "improve the authentication system" might fit perfectly in your hierarchy as a TASK with SUBTASK children, yet still be completely unactionable. What files need changing? What does success look like? What dependencies exist? Without this context, developers (human or AI) waste time hunting for information or, worse, make changes without understanding the full picture.

The Solution: Self-Contained Task Specifications

Actionable tasks include everything needed to execute them:

Not actionable: "Improve authentication system"

Actionable:

Add password reset flow

Technical specs:

- Backend: POST /auth/reset-password endpoint accepting email

- Backend: POST /auth/reset-password/confirm accepting token + new password

- Frontend: Add reset password form with email validation

- Storage: Add password_reset_tokens table with 24h expiry

Implementation checklist:

- [ ] Create migration for password_reset_tokens table

- [ ] Implement token generation with crypto.randomBytes

- [ ] Add email service integration for token delivery

- [ ] Create reset endpoints with rate limiting (5 requests/hour)

- [ ] Add frontend form with email validation

- [ ] Add token validation and expiry checking

Success criteria:

- [ ] User can request reset and receive email with token

- [ ] Token expires after 24 hours

- [ ] Invalid/expired tokens return clear error messages

- [ ] Rate limiting prevents abuse

- [ ] Unit tests cover token generation, validation, expiry

Dependencies: Email service must be configured

Files to modify:

- src/auth/routes.ts

- src/auth/services/reset.ts

- src/models/PasswordResetToken.ts

- src/frontend/components/ResetPasswordForm.tsx
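To show how concrete these checklist items are, the token-related ones might translate into something like the following. This is a sketch under assumptions: it uses Node's built-in `crypto.randomBytes` (named in the checklist), keeps tokens in an in-memory `Map` as a stand-in for the `password_reset_tokens` table, stores only a SHA-256 hash of each token, and makes tokens single-use. The function names and the hashing and single-use choices are ours, not part of the original plan.

```typescript
import { randomBytes, createHash } from "node:crypto";

const TOKEN_TTL_MS = 24 * 60 * 60 * 1000; // 24h expiry from the task spec

interface ResetTokenRecord {
  email: string;
  expiresAt: number; // epoch milliseconds
}

// In-memory stand-in for the password_reset_tokens table (assumption).
const resetTokens = new Map<string, ResetTokenRecord>();

// Store only a hash so a leaked table does not expose usable tokens.
function hashToken(token: string): string {
  return createHash("sha256").update(token).digest("hex");
}

// Generate a 32-byte random token, persist its hash, and return the raw token for emailing.
function issueResetToken(email: string, now: number = Date.now()): string {
  const token = randomBytes(32).toString("hex");
  resetTokens.set(hashToken(token), { email, expiresAt: now + TOKEN_TTL_MS });
  return token;
}

type ValidationResult =
  | { ok: true; email: string }
  | { ok: false; error: string };

// Validate and consume a token, returning a clear error message on failure.
function consumeResetToken(token: string, now: number = Date.now()): ValidationResult {
  const key = hashToken(token);
  const record = resetTokens.get(key);
  if (!record) return { ok: false, error: "Invalid reset token" };
  resetTokens.delete(key); // single use; also discards expired records
  if (now > record.expiresAt) return { ok: false, error: "Reset token has expired" };
  return { ok: true, email: record.email };
}
```

Passing `now` explicitly keeps the expiry logic testable without waiting 24 hours, which maps directly onto the "Token expires after 24 hours" success criterion.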

Notice how this task is self-contained: someone can pick it up tomorrow and make progress without hunting for context. The difference between a wish and a task is whether the next step is obvious.

This extends the research concept of coherent intermediate steps to include all necessary context: not just what to build, but where to build it, how to verify it, and what must happen first.
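One way to hold tasks to this standard is to give them a fixed shape and flag any with empty sections before they are handed off. The `TaskSpec` interface and `missingSections` check below are a hypothetical sketch that mirrors the sections of the password reset example; the post does not prescribe this schema.

```typescript
// Hypothetical schema mirroring the sections of the password reset example.
interface TaskSpec {
  title: string;
  technicalSpecs: string[];
  checklist: string[];
  successCriteria: string[];
  dependencies: string[]; // may legitimately be empty
  filesToModify: string[];
}

// Sections a task needs before anyone (human or agent) can pick it up.
const REQUIRED: (keyof TaskSpec)[] = [
  "technicalSpecs",
  "checklist",
  "successCriteria",
  "filesToModify",
];

// Returns the names of required sections that are missing or empty.
function missingSections(task: TaskSpec): string[] {
  return REQUIRED.filter((key) => {
    const value = task[key];
    return Array.isArray(value) ? value.length === 0 : value.trim() === "";
  });
}

const wish: TaskSpec = {
  title: "Improve authentication system",
  technicalSpecs: [],
  checklist: [],
  successCriteria: [],
  dependencies: [],
  filesToModify: [],
};

const resetTask: TaskSpec = {
  title: "Add password reset flow",
  technicalSpecs: ["POST /auth/reset-password endpoint accepting email"],
  checklist: ["Create migration for password_reset_tokens table"],
  successCriteria: ["Token expires after 24 hours"],
  dependencies: ["Email service must be configured"],
  filesToModify: ["src/auth/routes.ts"],
};

console.log(missingSections(wish));
console.log(missingSections(resetTask));
```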

Principle 3: Validation Boundaries

The Problem: The Black Box Challenge

LLMs are fundamentally different from traditional software. As one testing guide puts it: "Unlike traditional software development where outcomes are predictable and errors can be debugged, LLMs are a black-box with infinite possible inputs and corresponding outputs."

Your agent might tell you "The task has been successfully completed! ✅" while the code doesn't compile, tests fail, or critical functionality is broken. Agents are trained to sound confident, not to be correct.

This has driven research into formal verification for AI systems. Recent work proposes bi-directional integration: using formal methods to verify LLM outputs and using LLMs to help with formal verification tasks. Other work, such as AgentGuard, demonstrates runtime verification with dynamic probabilistic assurance, learning Markov Decision Processes online to verify agent actions as they execute.

But there's a gap between formal verification research and production engineering. Most teams don't have expertise in SMT solvers, formal specification languages, or automata theory.

The Solution: Pragmatic Validation

Define validation boundaries before writing code. These don't need to be formally verified - they need to be developer-readable and executable.

Validation through testability requirements: Every subtask must be testable in isolation. Not "should be" or "ideally" - must be. If you can't write a test for it, it's not a valid task. This forces specificity: you can't test "improve error handling," but you can test "invalid tokens return 401 with error message."

Validation through structured success criteria: Each task gets explicit checkboxes for verifiable outcomes:

Success Criteria:

- [ ] Middleware correctly validates JWT tokens

- [ ] Invalid tokens return 401 with clear error message

- [ ] Token expiry is enforced (tokens >15min old rejected)

- [ ] Unit tests cover valid tokens, expired tokens, malformed tokens

- [ ] Integration tests verify middleware integration with routes
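Criteria like these translate directly into tests. The sketch below is deliberately simplified: instead of a real JWT library, it verifies an HMAC-SHA256-signed JSON payload using Node's `crypto` module, and the `authenticate` function returns a plain status object rather than plugging into a specific web framework. The token format, secret, and function names are assumptions for illustration; only the 15-minute expiry and the 401 responses come from the criteria above.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

const SECRET = "dev-only-secret"; // assumption: load from configuration in practice
const MAX_AGE_MS = 15 * 60 * 1000; // tokens older than 15 minutes are rejected

// Simplified token: base64url(JSON payload) + "." + base64url(HMAC-SHA256 signature).
function sign(payload: object): string {
  const body = Buffer.from(JSON.stringify(payload)).toString("base64url");
  const sig = createHmac("sha256", SECRET).update(body).digest("base64url");
  return `${body}.${sig}`;
}

interface AuthResult {
  status: 200 | 401;
  error?: string;
  userId?: string;
}

// Middleware-style check: 200 with the user id, or 401 with a clear error message.
function authenticate(token: string | undefined, now: number = Date.now()): AuthResult {
  if (!token) return { status: 401, error: "Missing token" };
  const parts = token.split(".");
  if (parts.length !== 2) return { status: 401, error: "Malformed token" };
  const [body, sig] = parts;

  // Constant-time signature comparison (lengths must match first).
  const expected = createHmac("sha256", SECRET).update(body).digest();
  const given = Buffer.from(sig, "base64url");
  if (given.length !== expected.length || !timingSafeEqual(given, expected)) {
    return { status: 401, error: "Invalid signature" };
  }

  let parsed: unknown;
  try {
    parsed = JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
  } catch {
    return { status: 401, error: "Malformed token" };
  }
  const claims = parsed as { sub?: unknown; iat?: unknown } | null;
  if (!claims || typeof claims.sub !== "string" || typeof claims.iat !== "number") {
    return { status: 401, error: "Malformed token" };
  }
  if (now - claims.iat > MAX_AGE_MS) {
    return { status: 401, error: "Token expired" };
  }
  return { status: 200, userId: claims.sub };
}
```

A production service would use a maintained JWT library; the point here is only that each checkbox above maps to an assertion: valid tokens pass, expired and malformed tokens return 401 with a message.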

This is less rigorous than formal verification but more practical. We're not formally proving agent behavior is correct - we're ensuring the plan includes concrete ways to check if implementation is correct.

This pragmatic approach aligns with work on making verification accessible to non-experts. You trade some formal rigor for developer accessibility and adoption.

Beyond pre-defined validation, production systems require runtime monitoring. We instrument our planning agents with an observability layer that captures agentic traces, i.e., detailed logs of every decision, tool call, and output. This observability layer enables us to:

- Identify patterns in agent failures

- Debug unexpected behavior

- Continuously validate that agents follow intended planning workflows

- Gather data to improve confidence scoring and validation criteria
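As a rough illustration of what such a trace might hold, the sketch below records each decision, tool call, and output as a structured event, counts failed tool calls per tool to surface recurring failure patterns, and flags a run that claims completion before tests pass. The event shape, the `TraceRecorder` class, the `run_tests` tool name, and the completion regex are all hypothetical; the post does not describe its observability stack, and a real deployment would more likely export these events to a tracing backend.

```typescript
// Hypothetical trace event shapes for an agent run.
type TraceEvent =
  | { kind: "decision"; step: number; summary: string }
  | { kind: "tool_call"; step: number; tool: string; ok: boolean; error?: string }
  | { kind: "output"; step: number; text: string };

class TraceRecorder {
  private events: TraceEvent[] = [];
  private step = 0;

  decision(summary: string): void {
    this.events.push({ kind: "decision", step: this.step++, summary });
  }

  toolCall(tool: string, ok: boolean, error?: string): void {
    this.events.push({ kind: "tool_call", step: this.step++, tool, ok, error });
  }

  output(text: string): void {
    this.events.push({ kind: "output", step: this.step++, text });
  }

  // Count failed tool calls per tool to spot recurring failure patterns.
  failuresByTool(): Map<string, number> {
    const counts = new Map<string, number>();
    for (const e of this.events) {
      if (e.kind === "tool_call" && !e.ok) {
        counts.set(e.tool, (counts.get(e.tool) ?? 0) + 1);
      }
    }
    return counts;
  }

  // Workflow check: flag outputs that claim completion while the latest test run failed.
  claimedDoneWithoutPassingTests(testTool = "run_tests"): boolean {
    let testsPassed = false;
    for (const e of this.events) {
      if (e.kind === "tool_call" && e.tool === testTool) testsPassed = e.ok;
      if (e.kind === "output" && /complete|done/i.test(e.text) && !testsPassed) return true;
    }
    return false;
  }

  // Serialize as JSON Lines for storage or export (format is an assumption).
  toJsonLines(): string {
    return this.events.map((e) => JSON.stringify(e)).join("\n");
  }
}

const trace = new TraceRecorder();
trace.decision("Explore auth module");
trace.toolCall("read_file", true);
trace.toolCall("run_tests", false, "2 failing");
trace.output("The task has been successfully completed!");
console.log(trace.claimedDoneWithoutPassingTests(), trace.failuresByTool());
```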

This combination of upfront validation boundaries and runtime observability bridges the gap between planning-time specifications and execution-time reality.

Principle 4: Replanning

The Problem: Plans vs. Reality

No plan survives contact with reality. Research on adaptive planning focuses on autonomous uncertainty detection: when should an agent replan based on model confidence? Recent work uses predictive uncertainty from Deep Ensemble models to dynamically adjust planning intervals, balancing error accumulation against computational cost.
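To make the idea concrete, here is a toy illustration, not the cited method: treat the spread of predictions across an ensemble of models as the uncertainty signal, and shorten the interval between replanning steps as that spread grows. The linear mapping, the interval bounds, and the `maxStd` normalizer are arbitrary assumptions.

```typescript
// Toy sketch: ensemble disagreement as uncertainty, mapped to a replanning interval.

// Population standard deviation of the ensemble's predictions for one quantity.
function ensembleStd(predictions: number[]): number {
  const n = predictions.length;
  if (n === 0) throw new Error("need at least one prediction");
  const mean = predictions.reduce((a, b) => a + b, 0) / n;
  const variance = predictions.reduce((a, p) => a + (p - mean) ** 2, 0) / n;
  return Math.sqrt(variance);
}

// High disagreement: replan soon. Low disagreement: execute more steps before replanning.
function planningInterval(
  predictions: number[],
  minSteps = 1,
  maxSteps = 10,
  maxStd = 1.0, // assumed normalizer: a std at or above this forces minSteps
): number {
  const u = Math.min(ensembleStd(predictions) / maxStd, 1); // uncertainty in [0, 1]
  return Math.round(maxSteps - u * (maxSteps - minSteps));
}

console.log(planningInterval([0.5, 0.5, 0.5])); // ensemble agrees
console.log(planningInterval([0, 2])); // ensemble disagrees strongly
```

The tradeoff described above shows up directly: frequent replanning limits error accumulation but costs more computation, and the interval bounds decide where that balance sits.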

But this assumes the right question is "when should the agent autonomously decide to replan?" For production systems, there's often a more important question: "how do humans and agents collaborate on replanning?"

Your initial plan might look perfect until:

- Implementation reveals unexpected codebase constraints

- A dependency is more complex than anticipated

- User priorities change after seeing progress

- Technical decisions need revision based on new information

Autonomous uncertainty-based replanning optimizes for the agent's decision-making. Human-collaborative replanning optimizes for the team's decision-making.

The Solution: Structured Replanning Workflows

Make plans living documents rather than write-once artifacts:

Before execution: Generate a natural language summary of the plan and ask: "Would you like to proceed with this plan?" This isn't just politeness - it's a forcing function to review the plan as a whole before committing resources.

Analyze intent: When users provide feedback, classify it: are they approving with minor notes (proceed) or requesting changes (replan)? This prevents misunderstandings where casual comments get interpreted as fundamental objections.

Preserve context during replanning: Reset confidence scores to force re-evaluation, but preserve all the codebase exploration and context gathered during planning. You want to revise the task structure, not rediscover the entire codebase.

Iterate until aligned: Generate plan → review → request adjustments → get revised version. Repeat until the plan reflects both technical reality and user priorities.
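The workflow above can be sketched as a small loop. Everything here is an assumption for illustration: `classifyFeedback` uses a crude keyword heuristic where a real system would more likely ask a model to classify intent, `askUser` is synchronous for brevity, and `PlanState` separates the confidence scores (reset on replan) from the gathered codebase context (kept).

```typescript
// Hypothetical sketch of a human-in-the-loop replanning loop.

type Intent = "approve" | "replan";

interface PlanState {
  version: number;
  tasks: string[];
  confidence: Record<string, number>; // clarity scores per objective
  codebaseContext: string[]; // notes gathered while exploring the codebase
}

// Crude keyword heuristic standing in for intent analysis (a model call in practice).
function classifyFeedback(feedback: string): Intent {
  const requestsChange = /\b(change|instead|remove|add|split|replace|rather)\b/i.test(feedback);
  return requestsChange ? "replan" : "approve";
}

// Replanning resets confidence scores but carries the exploration context forward.
function replan(
  state: PlanState,
  feedback: string,
  revise: (tasks: string[], feedback: string) => string[],
): PlanState {
  const resetConfidence: Record<string, number> = {};
  for (const key of Object.keys(state.confidence)) resetConfidence[key] = 0;
  return {
    version: state.version + 1,
    tasks: revise(state.tasks, feedback),
    confidence: resetConfidence, // forces objectives to be re-scored
    codebaseContext: [...state.codebaseContext], // preserved, not rediscovered
  };
}

// Summarize, ask, classify, and replan until the user approves or rounds run out.
function alignPlan(
  initial: PlanState,
  askUser: (summary: string) => string,
  revise: (tasks: string[], feedback: string) => string[],
  maxRounds = 5,
): { plan: PlanState; approved: boolean } {
  let state = initial;
  for (let round = 0; round < maxRounds; round++) {
    const summary =
      `Plan v${state.version}: ${state.tasks.join("; ")}. ` +
      "Would you like to proceed with this plan?";
    const feedback = askUser(summary);
    if (classifyFeedback(feedback) === "approve") return { plan: state, approved: true };
    state = replan(state, feedback, revise);
  }
  return { plan: state, approved: false };
}
```

Keeping `codebaseContext` separate from `confidence` is what lets a revision reuse earlier exploration while still forcing the clarity check to run again.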

This is philosophically different from autonomous approaches. While some work on adaptive planning treats replanning as an optimization problem (when to replan to minimize cost while maintaining plan quality), we treat it as a collaboration feature: the user provides feedback, and the system accommodates it.

The tradeoff is intentional: you lose some autonomous adaptation but gain user control and trust. For production systems where humans are accountable for outcomes, control often matters more than autonomy.

Conclusion: Principles as Foundation

These four principles (clear objectives, actionable tasks, validation boundaries, and replanning) form the foundation for AI planning systems that bridge the gap from prototype to production.

The problems are well documented: specification gaming shows why vague objectives fail, work on task decomposition demonstrates the power of structure, formal methods research shows why validation matters, and adaptive planning shows that plans must evolve.

But research and production have different constraints. Much research optimizes for autonomous agent performance; production often needs human-AI collaboration. Formal methods offer rigor; production needs pragmatic validation that teams can actually use.

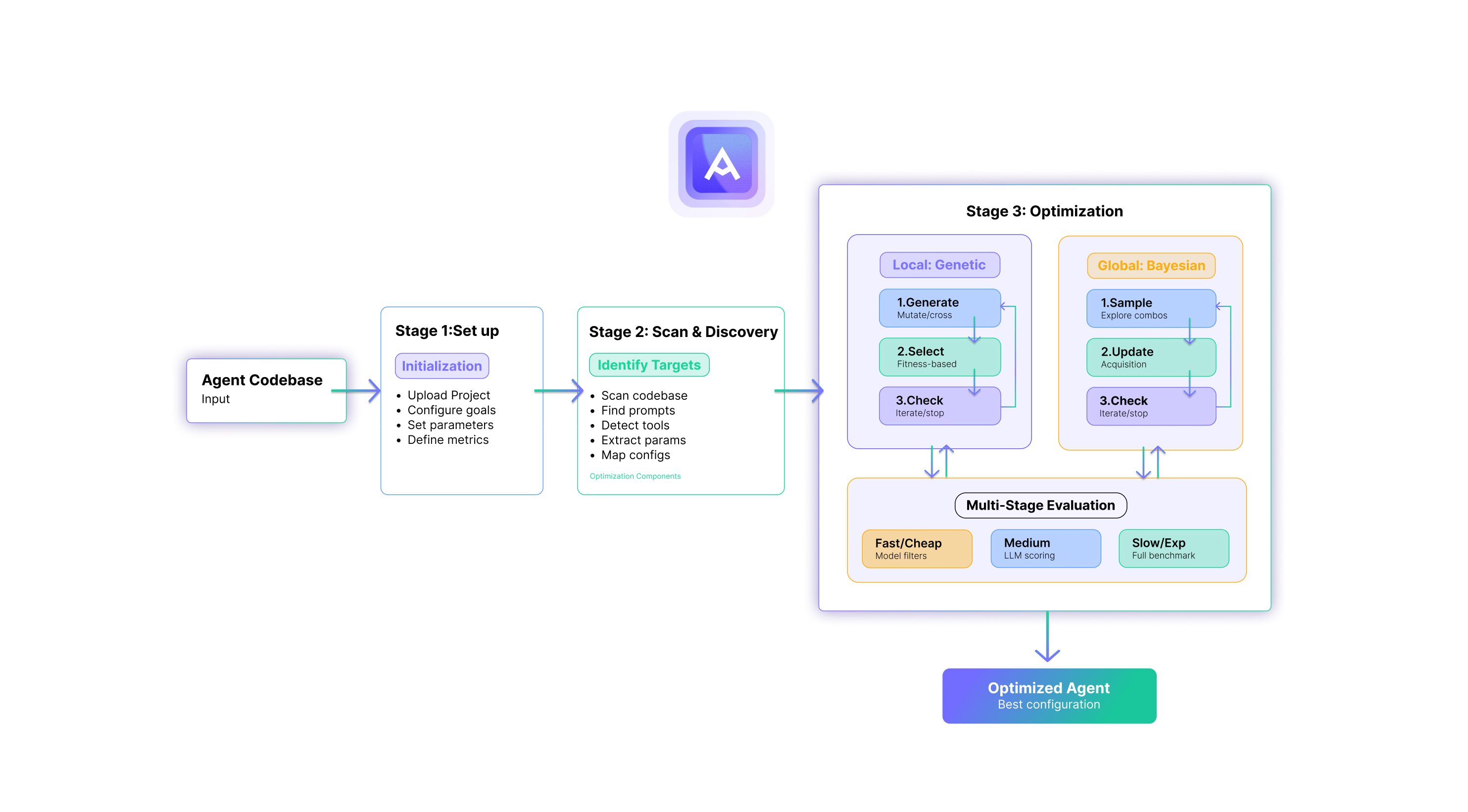

Building good plans for AI agents means adapting research insights to production reality, but it also requires novel mechanisms. At TurinTech, we built Artemis around these principles, so developers can make AI agents truly work toward their goals. Our approach includes:

- Quantitative confidence scoring to determine when objectives are clear enough to proceed, replacing vague intuition with measurable thresholds

- Runtime observability to capture agentic traces, enabling continuous validation and debugging of planning behavior

- Testability requirements that balance formal rigor with developer accessibility

- Human-collaborative replanning workflows that preserve context while accommodating changing requirements

These mechanisms emerged from building and monitoring Artemis in production, where we discovered that the gap between research principles and shipping features requires practical instrumentation, measurement, and human oversight.

In the next post, we'll explore how these principles translate into practice when building production planning systems.

The principles tell you what makes a good plan. The implementation tells you how to make one.

P.S. We’ve been hard at work turning Artemis into a true AI engineering partner. Soon, we’ll unveil the new agentic capabilities. Sign up to be among the first to try it out!