The DORA Trap: When Velocity Masks Risk

Generative AI tools are speeding up development, but they may be quietly degrading the very KPIs teams use to measure performance. Here’s how to avoid the DORA trap—and what engineering leaders should look for to ensure velocity doesn’t come at the cost of resilience.

Why Engineering Leaders Rely on DORA

The DORA (DevOps Research and Assessment) framework tracks four metrics: Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Mean Time to Recovery (MTTR). It has become the gold standard for evaluating the health and performance of software delivery teams.

For platform leads, Heads of Engineering, and DevOps directors, these aren’t just stats; they’re the heartbeat of the org. They help teams measure real progress, balance speed with safety, and identify where engineering investments are paying off.

But something’s changed: with the rise of GenAI coding tools, it’s easier than ever to write more code, faster. That doesn’t necessarily mean your DORA metrics are improving.

In fact, you might be doing the opposite.

How GenAI Can Degrade DORA Metrics

While tools like GitHub Copilot or Cursor help developers move faster, they can also introduce unvalidated, unoptimized, or redundant code into the pipeline: code that looks fine but slows teams down in subtle, compounding ways.

This is what we call the DORA trap: the illusion of velocity that masks growing inefficiencies and operational risk.

Let’s look at each DORA metric and how GenAI can impact it.

Deployment Frequency

What it should measure: How often you successfully release to production.

The GenAI effect: More code is written, but confidence in its quality drops. AI-generated code often requires additional review, validation, or debugging, so teams hold back deployments or delay merges due to uncertainty about performance or correctness.

What to watch for:

- Slower PR cycles despite increased activity

- Test failures or late-stage rejections

- Staging bottlenecks or missed deploy windows

Takeaway: Without trust in the output, frequency doesn’t improve; it stalls.
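To check whether frequency is actually improving, rather than just activity, it helps to count only successful production releases. The Python sketch below assumes a hypothetical `Deployment` record (a finish timestamp plus a success flag) exported from your CD system and buckets successful deploys by ISO week; the record shape and function name are illustrative, not part of any particular tool.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Deployment:
    # Hypothetical record shape; map your CD system's deploy log onto it.
    finished_at: datetime
    succeeded: bool


def deploys_per_week(deploys: list[Deployment]) -> dict[tuple[int, int], int]:
    """Count successful production deployments per ISO (year, week)."""
    counts: Counter = Counter()
    for d in deploys:
        if d.succeeded:  # failed or aborted deploys don't count toward frequency
            year, week, _ = d.finished_at.isocalendar()
            counts[(year, week)] += 1
    return dict(counts)


log = [
    Deployment(datetime(2024, 6, 3, 10), True),
    Deployment(datetime(2024, 6, 4, 15), False),
    Deployment(datetime(2024, 6, 5, 9), True),
    Deployment(datetime(2024, 6, 11, 9), True),
]
print(deploys_per_week(log))  # {(2024, 23): 2, (2024, 24): 1}
```

A week where commit volume spikes but this count stays flat is one signal of the stall described above.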

Lead Time for Changes

What it should measure: The time from code commit to production release.

The GenAI effect: Code is created faster, but it often requires rework. Engineers spend more time reviewing, editing, and validating AI outputs. The bottleneck shifts from writing code to ensuring it’s safe to deploy.

What to watch for:

- Longer review queues

- Higher rate of PR comments or requested changes

- Inconsistent standards for AI-generated code

Data point: Enterprise teams report 20–25% longer code reviews for AI-generated pull requests due to performance and security concerns.

Takeaway: Speed at the top of the funnel doesn’t help if the rest of the pipeline slows down.
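Lead time is commonly reported as a median so that a few long-lived branches don't dominate the number. A minimal sketch, assuming each change can be joined to a hypothetical `Change` record holding its commit timestamp and its production-deploy timestamp:

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Change:
    # Hypothetical record: when the commit landed and when it reached production.
    committed_at: datetime
    deployed_at: datetime


def median_lead_time_hours(changes: list[Change]) -> float:
    """Median commit-to-production time in hours (raises StatisticsError if empty)."""
    return median(
        (c.deployed_at - c.committed_at).total_seconds() / 3600 for c in changes
    )


changes = [
    Change(datetime(2024, 6, 3, 9), datetime(2024, 6, 3, 15)),   # 6 h
    Change(datetime(2024, 6, 3, 10), datetime(2024, 6, 4, 10)),  # 24 h
    Change(datetime(2024, 6, 4, 8), datetime(2024, 6, 4, 11)),   # 3 h
]
print(median_lead_time_hours(changes))  # 6.0
```

Tracking this figure alongside commit volume makes the shifted bottleneck visible: if AI tools raise commit counts while the median climbs, the time is going into review and validation rather than authoring.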

Change Failure Rate

What it should measure: The percentage of deployments causing a failure or requiring a rollback.

The GenAI effect: AI-generated code increases the risk of subtle bugs, edge-case failures, and hallucinated logic or dependencies. Without validation, more issues slip through to production.

What to watch for:

- Increase in incident reports post-deploy

- Rollbacks becoming more common

- Decreased trust in AI-written tests or business logic

Data point: A 2024 GitHub study found GenAI code introduced 41% more bugs than human-written code.

Takeaway: Fast code isn’t safe code, especially without guardrails.
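Change failure rate is a simple ratio, but it only means something once a team agrees on what counts as a failure. The sketch below assumes a hypothetical `caused_failure` flag that is set whenever a deploy led to an incident, rollback, or hotfix:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deployment:
    deploy_id: str
    # Hypothetical flag: True if this deploy led to an incident, rollback, or hotfix.
    caused_failure: bool


def change_failure_rate(deploys: list[Deployment]) -> Optional[float]:
    """Percentage of deployments that caused a failure; None if there were none."""
    if not deploys:
        return None
    failed = sum(1 for d in deploys if d.caused_failure)
    return 100.0 * failed / len(deploys)


# 20 deploys, of which the first 3 were rolled back or hotfixed.
deploys = [Deployment(f"d{i}", caused_failure=i < 3) for i in range(20)]
print(change_failure_rate(deploys))  # 15.0
```

Returning `None` for an empty window avoids reporting a misleading 0% failure rate for a period with no deploys at all.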

Mean Time to Recovery (MTTR)

What it should measure: How long it takes to restore service after an incident.

The GenAI effect: When systems fail, AI-generated code can be harder to diagnose, especially if it lacks documentation or validation. Missing context or observability adds drag to incident response.

What to watch for:

- More “mystery bugs” or unclear ownership

- Longer on-call investigations

- Gaps in logs, tests, or version history

Takeaway: Increased complexity from GenAI can slow recovery even when the code appears correct.
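MTTR is the average gap between when an incident starts and when service is restored. A minimal sketch, assuming incidents can be exported as hypothetical `Incident` records carrying those two timestamps:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Incident:
    # Hypothetical record shape from an incident-management tool.
    started_at: datetime
    restored_at: datetime


def mean_time_to_recovery(incidents: list[Incident]) -> Optional[timedelta]:
    """Mean time from incident start to service restoration; None if no incidents."""
    if not incidents:
        return None
    total = sum((i.restored_at - i.started_at for i in incidents), timedelta())
    return total / len(incidents)


incidents = [
    Incident(datetime(2024, 6, 3, 10, 0), datetime(2024, 6, 3, 10, 30)),  # 30 min
    Incident(datetime(2024, 6, 5, 14, 0), datetime(2024, 6, 5, 15, 30)),  # 90 min
    Incident(datetime(2024, 6, 7, 9, 0), datetime(2024, 6, 7, 10, 0)),    # 60 min
]
print(mean_time_to_recovery(incidents))  # 1:00:00
```

Because a mean is pulled upward by a single long outage, a growing tail of drawn-out investigations (the "mystery bug" pattern) will show up here even if most incidents still resolve quickly.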

A Shift in Thinking: From Output to Outcome

If you’re measured on DORA metrics, GenAI can’t be treated as a shortcut. It needs to be part of a system that considers:

- Code quality and validation

- Optimization for performance, memory use, and the target runtime environment

- Alignment with standards across teams and repos

Engineering orgs that succeed with AI aren’t just generating more code; they’re evolving it. They’re using platforms that validate, benchmark, and refine GenAI output before it becomes technical debt.

How to Avoid the DORA Trap

To keep your DORA metrics moving in the right direction, ask:

- Are we reviewing more, not less, since adopting AI tools?

- Are PRs with GenAI code taking longer to be approved or merged?

- Do we have validation or optimization steps before staging?

- Is incident response getting harder?

If any of these ring true, it’s worth reassessing how AI fits into your DevOps workflow.
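Whether PRs with GenAI code take longer to merge can be answered directly from PR history. The sketch below assumes each pull request carries a hypothetical `ai_assisted` flag (for example, derived from a label your team applies) and compares median open-to-merge time between the two cohorts; unmerged PRs are skipped.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    opened_at: datetime
    merged_at: Optional[datetime]  # None if the PR is still open or was closed
    ai_assisted: bool  # hypothetical flag, e.g. set from a PR label


def median_merge_hours_by_cohort(prs: list[PullRequest]) -> dict[str, float]:
    """Median open-to-merge time in hours for AI-assisted vs. other merged PRs."""
    cohorts: dict[str, list[float]] = {"ai_assisted": [], "other": []}
    for pr in prs:
        if pr.merged_at is None:
            continue  # only merged PRs have a time-to-merge
        hours = (pr.merged_at - pr.opened_at).total_seconds() / 3600
        cohorts["ai_assisted" if pr.ai_assisted else "other"].append(hours)
    # Skip empty cohorts, since median() raises on an empty list.
    return {name: median(h) for name, h in cohorts.items() if h}


day = datetime(2024, 6, 3)
prs = [
    PullRequest(day, datetime(2024, 6, 3, 10), ai_assisted=True),   # 10 h
    PullRequest(day, datetime(2024, 6, 3, 14), ai_assisted=True),   # 14 h
    PullRequest(day, datetime(2024, 6, 3, 8), ai_assisted=False),   # 8 h
    PullRequest(day, datetime(2024, 6, 3, 10), ai_assisted=False),  # 10 h
    PullRequest(day, datetime(2024, 6, 3, 12), ai_assisted=False),  # 12 h
    PullRequest(day, None, ai_assisted=True),                       # still open
]
print(median_merge_hours_by_cohort(prs))  # {'ai_assisted': 12.0, 'other': 10.0}
```

A persistent gap between the two medians is worth investigating, though it doesn't by itself prove AI-generated code is the cause; PR size and complexity may also differ between cohorts.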

If You're Looking for a Safety Net

Tools like GitHub Copilot and ChatGPT are here to stay. The key isn’t replacing them; it’s complementing them with platforms that evolve their output into something trusted, measurable, and aligned with your goals.

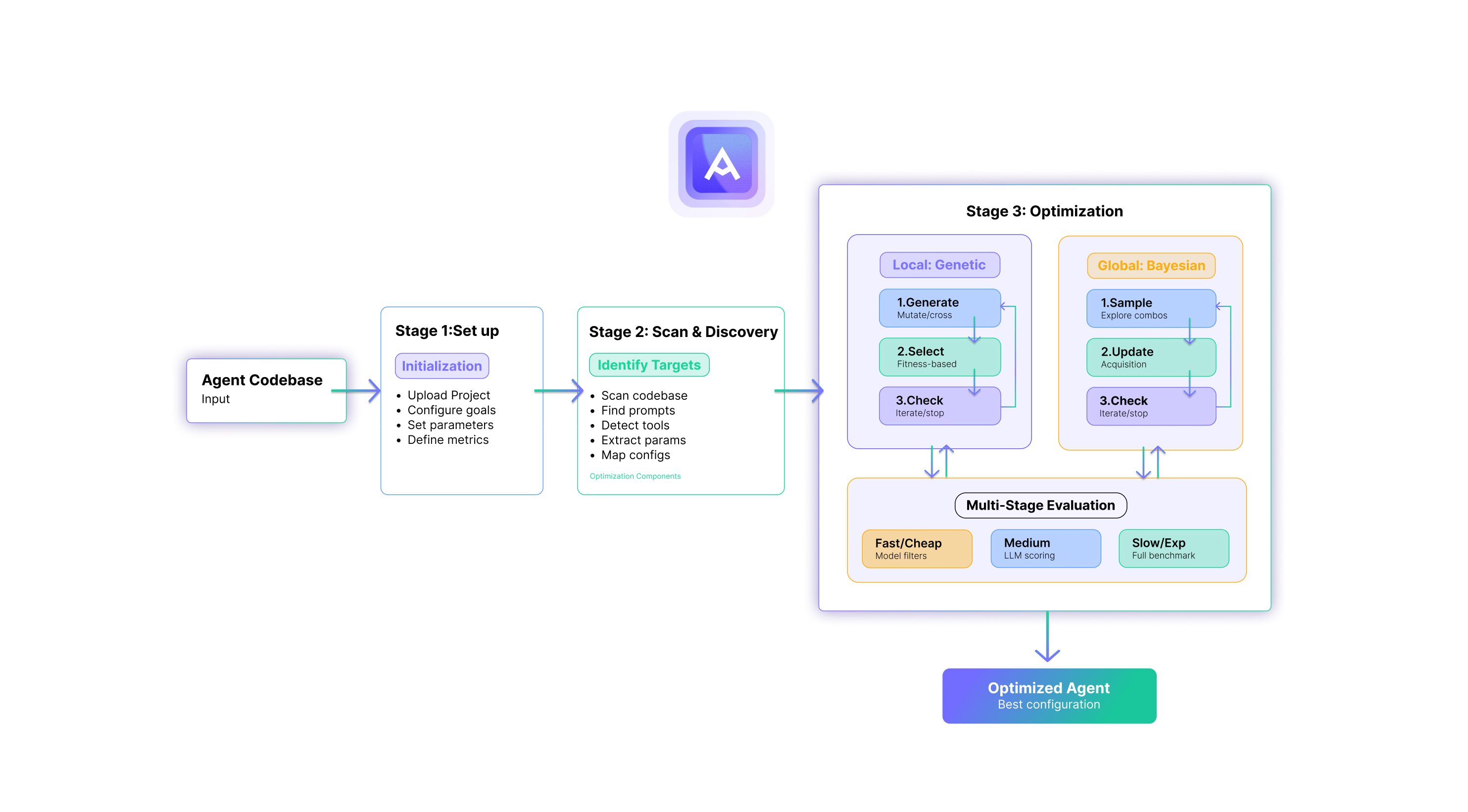

That’s what Artemis is built for.

It’s an intelligence engine that integrates into your CI/CD flow to analyze, optimize, and validate GenAI-generated, legacy, or human-written code, so you can genuinely improve your DORA metrics rather than just chase them.