From Code Generation to Intelligent Systems: Evolving AI by Design

Generative AI is no longer a question of if, but of how. Enterprises across industries are moving quickly from pilots to production, eager to automate workflows, accelerate development, and unlock innovation. But as adoption scales, so do the cracks. What begins as rapid AI code generation often collapses under real-world complexity: ballooning costs, inconsistent output, and rising technical debt. The problem isn’t the model. It’s the absence of systems that can guide, optimize, and evolve AI toward business outcomes.

Early results can be impressive: faster code generation, content creation, and automation across tasks. But once these systems face production-scale complexity, their limitations become clear. Outputs that seem correct can misfire in deployment. Pipelines break. Costs spike. Teams spend more time reviewing, debugging, and adapting GenAI output than they save on generating it. Instead of accelerating progress, AI often introduces new friction, or worse, new risk.

Gartner has flagged a growing pattern: by the end of 2025, at least 30% of GenAI projects are expected to be abandoned after the proof-of-concept phase, often due to poor data quality, unclear value, or inadequate risk controls. And by 2027, more than 40% of agentic AI projects could be cancelled for similar reasons, including rising costs and governance failures. The message is clear: without the right guardrails, many enterprise AI initiatives won’t make it past experimentation.

Executives are recognizing the pattern. Usage scales, but value doesn’t always follow. Teams report spending more time reviewing and debugging GenAI output than they would writing the code from scratch. Hallucinations persist. Formats drift. Pipelines break under pressure. And while model innovation continues at breakneck speed, the core challenge isn’t just the quality of generation; it’s the absence of systems that help organizations steer, adapt, and sustain it.

What’s missing is a system that drives the desired outcome

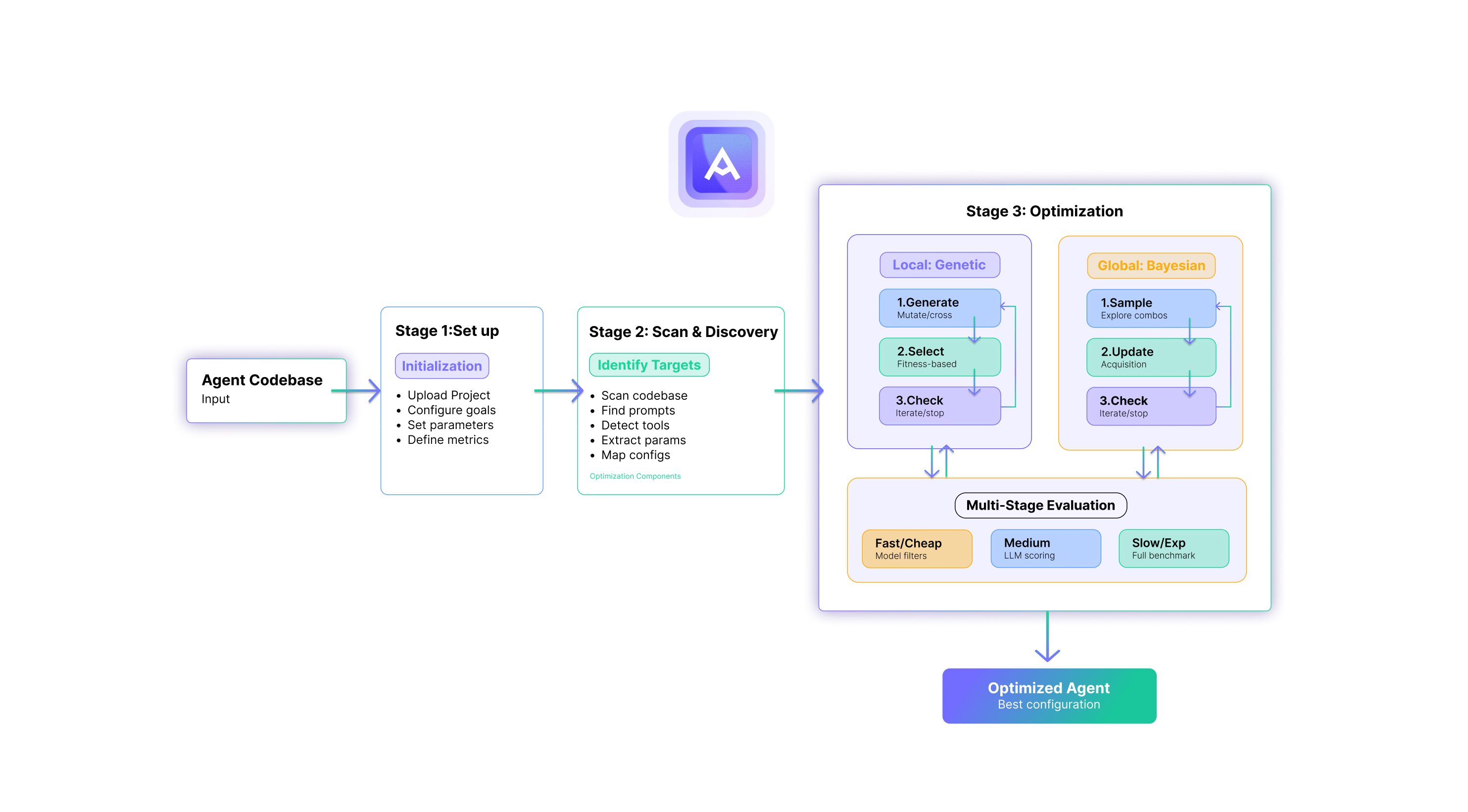

To unlock GenAI’s potential at scale, enterprises need more than models. They need systems that don’t just generate output, but that can plan, optimize, and adapt AI behavior based on evolving business goals.

That shift is already underway. We’re moving beyond one-shot prompting into agentic planning, designing AI workflows that can reason through how to approach a task, not just what to produce. These workflows need to incorporate logic, memory, and real-time decision-making based on context, constraints, and priorities.

Importantly, this also introduces the need for multi-objective optimization, a concept from mathematical optimization and operations research that takes on new importance in enterprise AI.

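At its core, multi-objective optimization means balancing several goals at once. Instead of optimizing a single metric such as speed, it seeks the best trade-off across performance, cost-efficiency, security, and compliance. Because these goals often conflict, the aim is usually a Pareto-optimal result: one where no objective can be improved without making another worse.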

In financial services, for example, a trading algorithm can’t just be the fastest; it also needs to minimize risk exposure, meet regulatory requirements, and run within its infrastructure budget. Optimizing for one goal at the expense of the others leads to imbalance and, ultimately, failure.
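The trading example can be made concrete with a minimal Python sketch, assuming each candidate strategy is scored on three objectives to minimize (latency, risk exposure, cost) plus a pass/fail compliance check. The `Candidate` type, the `pareto_front` function, and all numbers are hypothetical and purely illustrative. Hard constraints (compliance, budget) filter first; the remaining candidates are reduced to the Pareto front, the set that no other option beats on every objective at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical strategy configuration; lower is better for every objective."""
    name: str
    latency_ms: float  # execution speed
    risk: float        # illustrative risk-exposure score
    cost: float        # illustrative monthly infrastructure cost
    compliant: bool    # hard constraint: passes regulatory checks

    def objectives(self) -> tuple[float, float, float]:
        return (self.latency_ms, self.risk, self.cost)


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on every objective and strictly better on at least one."""
    pairs = list(zip(a.objectives(), b.objectives()))
    return all(x <= y for x, y in pairs) and any(x < y for x, y in pairs)


def pareto_front(candidates: list[Candidate], budget: float) -> list[Candidate]:
    # Hard constraints first: drop anything non-compliant or over budget.
    feasible = [c for c in candidates if c.compliant and c.cost <= budget]
    # Keep only candidates that no other feasible candidate dominates.
    return [c for c in feasible if not any(dominates(o, c) for o in feasible)]


candidates = [
    Candidate("fastest", latency_ms=1.0, risk=0.9, cost=120.0, compliant=True),
    Candidate("balanced", latency_ms=3.0, risk=0.4, cost=80.0, compliant=True),
    Candidate("cautious", latency_ms=8.0, risk=0.2, cost=60.0, compliant=True),
    Candidate("dominated", latency_ms=9.0, risk=0.5, cost=90.0, compliant=True),
    Candidate("unapproved", latency_ms=0.5, risk=0.1, cost=50.0, compliant=False),
]

front = pareto_front(candidates, budget=100.0)
print([c.name for c in front])  # ['balanced', 'cautious']
```

Note that "fastest" never reaches the front because it breaks the budget, and the front deliberately stops short of naming a single winner: choosing between "balanced" and "cautious" depends on how the business weighs latency against risk.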

In practice, multi-objective optimization requires systems that can:

- Capture desired outcomes and translate them into evaluation criteria for prompts, models, and agents

- Plan and orchestrate agentic workflows that adapt in real time to business goals, constraints, and feedback

- Evaluate outputs and re-plan adaptively to ensure the desired outcome is reached

- Evolve continuously, improving the system’s own learning and optimization process over time
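The four capabilities above can be sketched as a single evaluate-and-re-plan loop. This is a minimal Python illustration under heavy assumptions: `toy_generate` is a hypothetical stand-in for a model or agent call, the criteria are simple string checks, re-planning just feeds failures back as hints, and "evolving" means remembering which hints produced a passing result for a task. A production system would use real evaluators and far richer planning.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Criterion:
    """An outcome translated into a checkable evaluation criterion."""
    name: str
    check: Callable[[str], bool]


def toy_generate(task: str, hints: list[str]) -> str:
    """Hypothetical model stand-in: fixes an issue only once a hint asks for it."""
    sig = "def handler() -> None:" if "fix: has_type_hints" in hints else "def handler():"
    doc = '    """Handle the request."""\n' if "fix: has_docstring" in hints else ""
    return f"{sig}\n{doc}    return None"


def run(task: str,
        criteria: list[Criterion],
        generate: Callable[[str, list[str]], str],
        memory: dict[str, list[str]],
        max_rounds: int = 3) -> tuple[str, int]:
    # Evolve: start from hints that worked for this task before.
    hints = list(memory.get(task, []))
    failures: list[str] = []
    for round_no in range(1, max_rounds + 1):
        output = generate(task, hints)
        # Evaluate the output against every criterion.
        failures = [c.name for c in criteria if not c.check(output)]
        if not failures:
            memory[task] = hints  # remember what produced a passing result
            return output, round_no
        # Re-plan: feed each failure back as a hint for the next attempt.
        hints += [f"fix: {name}" for name in failures if f"fix: {name}" not in hints]
    raise RuntimeError(f"criteria still failing after {max_rounds} rounds: {failures}")


criteria = [
    Criterion("has_type_hints", lambda s: "->" in s),
    Criterion("has_docstring", lambda s: '"""' in s),
]
memory: dict[str, list[str]] = {}

output, rounds = run("write request handler", criteria, toy_generate, memory)
print(rounds)  # 2: the first attempt fails both checks, the re-planned one passes

_, rounds_again = run("write request handler", criteria, toy_generate, memory)
print(rounds_again)  # 1: the remembered hints are applied up front
```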

This kind of structured intelligence doesn’t just improve efficiency; it also reduces risk. Without embedded feedback loops and validation, AI can produce outputs that look right but fail silently, introducing bugs, compliance violations, or downstream performance issues. By defining success criteria up front and building them into how AI tasks are executed, organizations move from reacting to errors to engineering confidence into the system itself.

That’s why outcome-based design is critical. It’s not enough to generate plausible answers; you need to start with the outcomes that matter and work backward. Whether the goal is faster execution, lower cloud cost, regulatory compliance, or scalability, those targets must guide how models are prompted, how workflows are constructed, and how outputs are evaluated. Multi-objective optimization brings this to life, aligning generation and refinement around measurable, business-specific priorities.

To do this well, enterprises need something that sits above the models: a coordination layer that can plan, evaluate, and adapt AI behavior in context. It’s not about replacing developers or picking the “best” LLM. It’s about surrounding those models with logic, memory, and feedback systems that guide AI toward real, measurable outcomes. This is how you move from AI that simply generates to AI that reliably delivers.

And because this approach is model-agnostic, it doesn’t depend on any single provider. Instead, it builds a flexible intelligence framework that can benchmark, evaluate, and optimize across models as the landscape evolves, ensuring that the surrounding infrastructure, not just the model, drives long-term performance.
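One way to keep a coordination layer model-agnostic is to code against a small provider interface and benchmark every model on the same evaluation set. The Python sketch below assumes a hypothetical `ModelProvider` protocol with a single `complete` method; `StubProvider`, the model names, the prices, and the eval cases are placeholders, not any vendor's real API.

```python
from collections.abc import Sequence
from dataclasses import dataclass, field
from typing import Protocol


class ModelProvider(Protocol):
    """Hypothetical interface any model client can satisfy."""
    name: str
    cost_per_call: float

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubProvider:
    """Placeholder for a real model client; returns canned answers."""
    name: str
    cost_per_call: float
    answers: dict[str, str] = field(default_factory=dict)

    def complete(self, prompt: str) -> str:
        return self.answers.get(prompt, "")


def benchmark(providers: Sequence[ModelProvider],
              eval_set: list[tuple[str, str]]) -> list[tuple[str, float, float]]:
    """Score each provider on the same cases: (name, accuracy, total cost)."""
    results = []
    for p in providers:
        passed = sum(p.complete(prompt).strip() == expected for prompt, expected in eval_set)
        results.append((p.name, passed / len(eval_set), p.cost_per_call * len(eval_set)))
    return results


def pick(results: list[tuple[str, float, float]]) -> str:
    # Highest accuracy wins; ties go to the cheaper model.
    return max(results, key=lambda r: (r[1], -r[2]))[0]


eval_set = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]
all_right = {"2+2": "4", "capital of France": "Paris", "3*3": "9"}
providers = [
    StubProvider("model-a", cost_per_call=0.010, answers=all_right),
    StubProvider("model-b", cost_per_call=0.002, answers=all_right),
    StubProvider("model-c", cost_per_call=0.001, answers={"2+2": "4"}),
]

results = benchmark(providers, eval_set)
print(pick(results))  # model-b: as accurate as model-a, but cheaper
```

Because the workflow only depends on the interface, adopting a new model means adding one provider and rerunning the benchmark, not rewriting the surrounding logic.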

The shift underway is strategic, not technical.

It reflects a broader truth about enterprise AI: real transformation happens not when systems generate more, but when they generate better output, more intelligently, and in clear alignment with business goals. The most successful organizations won’t be those with the largest AI budgets; they’ll be the ones that design for adaptability, validation, and long-term value creation.

As AI adoption matures, the shortcut era is ending. Leaders are realizing that unchecked generation often creates more mess than momentum. The next phase isn’t about more AI; it’s about building intelligent platforms that scale AI responsibly, deliver real outcomes, and evolve with the business.

Not more AI but better AI, by design.